Deep Learning is a Mandate for Humans, Not Just Machines — Andrew Ng

Yes, deep learning is the next big thing. Yes, AI is changing the world. Yes, it will take over your jobs. All fuss aside, you need to sit down and write code on your own to see things working. Practical knowledge is as important as theoretical knowledge.

There are many deep learning frameworks that make it easy and fast to train different models and deploy them: TensorFlow, PyTorch, Theano, Keras, Caffe, tiny-dnn, and the list goes on. Here is a great comparison for those who want to know the pros and cons of each one.

We will focus on TensorFlow. (For all the great debaters out there, I'm not a supporter of any one framework. It turns out TensorFlow is relatively new, has really great resources to learn from, and most of the industry seems to use it.) I'm learning TensorFlow from other resources, so I will try to merge them here in the best way possible. This should help those who haven't used TensorFlow before; I cannot say anything for others. It is assumed that you have a basic understanding of neural networks, loss functions, optimization techniques, backpropagation, etc. If not, I suggest you go through this great book by Michael Nielsen. I will also often mention other libraries such as numpy, sklearn, matplotlib, etc.

Constants, Variables, Placeholders and Operations

Constants: Constants here have the same meaning as in any other programming language. They store a constant value (integer, float, etc.).

Where will you be using constants? For values that are not supposed to change, like the number of layers, the shape of the weight vectors, the shape of each layer, etc. Some of the great constant initializers are here (most of them look like numpy's). One thing to notice is that you cannot even get the value of a tensor until you initialize a session. What is a session? We will get to it.
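To make this concrete, here is a minimal sketch of a few constants. It assumes the TensorFlow 1.x-style API, which on newer installs is reachable through `tensorflow.compat.v1`; the names `num_layers`, `learning_rate`, `zeros` and `filled` are made up for illustration.

```python
import tensorflow.compat.v1 as tf  # assumption: TF 1.14+ or 2.x, using the 1.x-style API

tf.disable_eager_execution()  # keep the graph-and-session behaviour described in this post

# Hypothetical names, chosen only for this illustration.
num_layers = tf.constant(3)                           # scalar integer constant
learning_rate = tf.constant(0.01, dtype=tf.float32)   # scalar float constant
zeros = tf.zeros([2, 3])                              # numpy-like initializer
filled = tf.fill([2, 2], 7)                           # 2x2 tensor filled with 7

# Without a session this prints a description of the tensor, not the number 3.
print(num_layers)

# Only inside a session do we get the actual values back.
with tf.Session() as sess:
    num_layers_value = sess.run(num_layers)
    filled_value = sess.run(filled)

print(num_layers_value)  # 3
print(filled_value)
```

Notice that the shape and dtype are known as soon as the constant is defined; only the value needs a session.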

Variables:

Variables are the values that get updated in the TensorFlow graph, for example weights and biases. More about variables here.
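A small sketch of how this might look, again assuming the 1.x-style API through `tensorflow.compat.v1`. The names `weights`, `bias` and `bump_bias` are hypothetical, and the update is a hand-written `assign_add` instead of a real training step.

```python
import tensorflow.compat.v1 as tf  # assumption: TF 1.14+ or 2.x, using the 1.x-style API

tf.disable_eager_execution()

# Hypothetical weight matrix and bias vector for a 3-input, 2-output layer.
weights = tf.Variable(tf.zeros([3, 2]), name="weights")
bias = tf.Variable(tf.ones([2]), name="bias")

# An operation that changes the variable's value each time it is run.
bump_bias = tf.assign_add(bias, [0.5, 0.5])

with tf.Session() as sess:
    # Variables must be initialized explicitly before they are used.
    sess.run(tf.global_variables_initializer())
    before = sess.run(bias)
    sess.run(bump_bias)
    after = sess.run(bias)

print(before)  # [1. 1.]
print(after)   # [1.5 1.5]
```

Unlike a constant, the variable keeps its new value for as long as the session lives.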

Placeholders:

Placeholders, as the name suggests, reserve space for data. During the forward pass you feed data into the network through placeholders. Placeholders have a defined shape. If your input data has n dimensions, you typically specify n-1 of them and leave the batch dimension open, so that while feeding you can pass data into the network in batches. More about placeholders here.
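Here is a minimal sketch of a placeholder with an open batch dimension. It assumes the 1.x-style API through `tensorflow.compat.v1`; the names `x` and `row_sums` and the feature size of 3 are made up for this example.

```python
import numpy as np
import tensorflow.compat.v1 as tf  # assumption: TF 1.14+ or 2.x, using the 1.x-style API

tf.disable_eager_execution()

# The batch dimension is None (left open); the feature dimension is fixed to 3.
x = tf.placeholder(tf.float32, shape=[None, 3], name="x")
row_sums = tf.reduce_sum(x, axis=1)  # one sum per example in the batch

with tf.Session() as sess:
    # The same graph accepts batches of different sizes through feed_dict.
    small = sess.run(row_sums, feed_dict={x: np.ones((2, 3))})
    large = sess.run(row_sums, feed_dict={x: np.ones((5, 3))})

print(small.shape)  # (2,)
print(large.shape)  # (5,)
```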

Operations:

Operations are the basic functions we define on variables, constants and placeholders. More about operations here.
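As a rough illustration, the sketch below combines a placeholder, a variable and a constant with two operations (`tf.matmul` and `tf.add`). It assumes the 1.x-style API through `tensorflow.compat.v1`, and all names and numbers are invented for the example.

```python
import tensorflow.compat.v1 as tf  # assumption: TF 1.14+ or 2.x, using the 1.x-style API

tf.disable_eager_execution()

# Hypothetical inputs: one placeholder, one variable, one constant.
x = tf.placeholder(tf.float32, shape=[None, 2])
w = tf.Variable([[3.0], [4.0]])
b = tf.constant(1.0)

# Operations only describe the computation; nothing is calculated yet.
y = tf.add(tf.matmul(x, w), b)

with tf.Session() as sess:
    sess.run(tf.global_variables_initializer())
    result = sess.run(y, feed_dict={x: [[1.0, 2.0]]})

print(result)  # [[12.]] because 1*3 + 2*4 + 1 = 12
```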

Session

Session is a class implemented in TensorFlow for running operations and evaluating constants and variables. More about sessions here.
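A short sketch of the two common ways to use a session, assuming the 1.x-style API through `tensorflow.compat.v1`. The tensors `total` and `product` are hypothetical examples.

```python
import tensorflow.compat.v1 as tf  # assumption: TF 1.14+ or 2.x, using the 1.x-style API

tf.disable_eager_execution()

a = tf.constant(2.0)
b = tf.constant(5.0)
total = a + b      # overloaded operator, same as tf.add(a, b)
product = a * b    # same as tf.multiply(a, b)

# Option 1: open and close the session by hand.
sess = tf.Session()
total_value = sess.run(total)
sess.close()

# Option 2: let a with-block close the session; run() can fetch several tensors at once.
with tf.Session() as sess:
    total_again, product_value = sess.run([total, product])

print(total_value)    # 7.0
print(product_value)  # 10.0
```

I prefer the with-block because the session's resources are released even if something fails in between.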

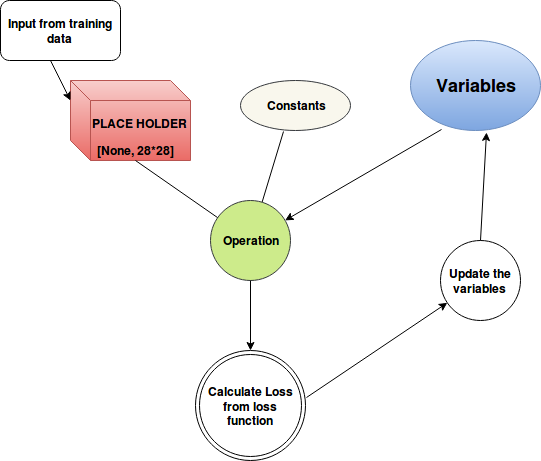

How does TensorFlow work?

What are the main steps of any machine learning algorithm in TensorFlow?

- Import the data, normalize it, or create a data input pipeline.

- Define an algorithm: define the variables, the structure of the algorithm, the loss function, the optimization technique, etc. TensorFlow creates a static computational graph for this.

- Feed the data through this computation graph, compute the loss from the loss function, and update the weights (variables) by backpropagating the error.

- Stop when you reach some stopping criterion.
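The four steps above can be sketched end to end on a toy problem. This is only an illustration under my own assumptions: synthetic data from the line y = 2x + 1, a single weight and bias, mean squared error, plain gradient descent, and a made-up stopping threshold, all through the 1.x-style API in `tensorflow.compat.v1`.

```python
import numpy as np
import tensorflow.compat.v1 as tf  # assumption: TF 1.14+ or 2.x, using the 1.x-style API

tf.disable_eager_execution()

# Step 1: data. Synthetic points on y = 2x + 1, already scaled to [-1, 1].
xs = np.linspace(-1.0, 1.0, 50).astype(np.float32)
ys = 2.0 * xs + 1.0

# Step 2: define the algorithm as a static graph.
x = tf.placeholder(tf.float32, shape=[None])
y = tf.placeholder(tf.float32, shape=[None])
w = tf.Variable(0.0)
b = tf.Variable(0.0)
loss = tf.reduce_mean(tf.square(w * x + b - y))  # mean squared error
optimizer = tf.train.GradientDescentOptimizer(learning_rate=0.1)
train_step = optimizer.minimize(loss, var_list=[w, b])

with tf.Session() as sess:
    sess.run(tf.global_variables_initializer())
    for step in range(1000):  # hard cap on the number of updates
        # Step 3: feed the data, compute the loss, update the variables.
        _, loss_value = sess.run([train_step, loss], feed_dict={x: xs, y: ys})
        # Step 4: stop once the loss is small enough (threshold is arbitrary).
        if loss_value < 1e-6:
            break
    w_value, b_value = sess.run([w, b])

print(w_value, b_value)  # close to 2 and 1
```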

Here is a very simple example of a multiply operation on two constants.
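A minimal version of such an example, assuming the 1.x-style API through `tensorflow.compat.v1` (the values 3 and 4 are arbitrary):

```python
import tensorflow.compat.v1 as tf  # assumption: TF 1.14+ or 2.x, using the 1.x-style API

tf.disable_eager_execution()

a = tf.constant(3)
b = tf.constant(4)
product = tf.multiply(a, b)  # adds a multiply node to the graph

with tf.Session() as sess:
    result = sess.run(product)

print(result)  # 12
```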

And here is another simple computation graph.

That’s it for now!

Source Code:

You can find the source code for this assignment on my GitHub repo.

References:

- Really good and detailed blog.

Next we will see Linear and Logistic Regression.

Hit ❤ if you find this useful. :D